Bulk test your FAQs

Last updated: 03 April 2024

Manually testing FAQs one by one might feel like a bit of a drag. With the FAQ testing feature, you can bulk test a series of utterances against your FAQs at once.

This feature is only accessible to Owners. If you do not have access to this feature, please reach out to your account manager.

Step 1: Create FAQs

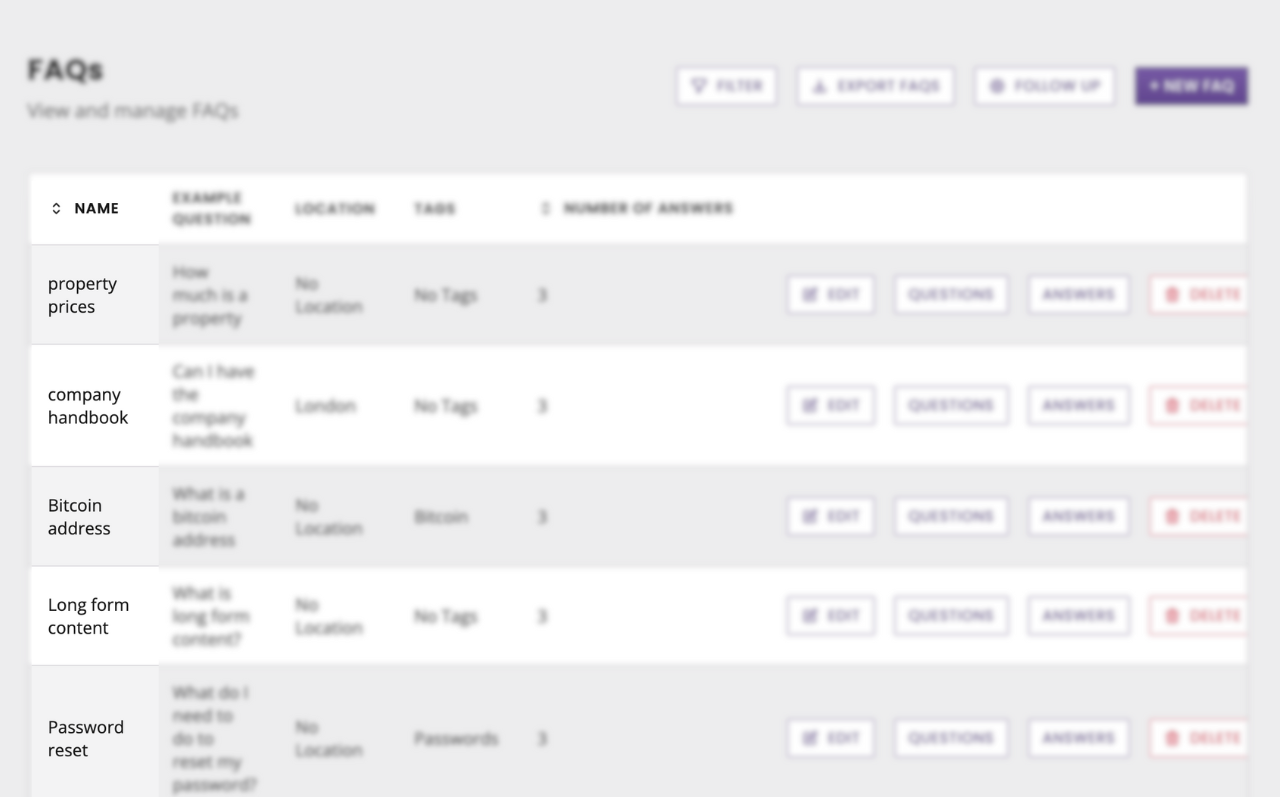

For the purpose of this document, we’ll assume you have already created some FAQs. If you haven’t, start here: Create FAQs.

Step 2: Create a CSV

To speed up testing, we’ll create a CSV file with one row per utterance and three columns:

FAQ IDs: The ID of the FAQ you expect the utterance to trigger.

To find an FAQ’s ID, go to Knowledge → FAQs and click on the FAQ’s Questions. The ID appears in the URL: in https://bots.platform.com/faqs/222/questions, for example, the FAQ ID is 222.

FAQ names: The name of the FAQ you expect the utterance to trigger.

You can find the FAQ’s name by going to Knowledge → FAQs and looking at the far left column.

Utterances: The utterance you want to test against the FAQ.

You can write new utterances or reuse an FAQ’s example utterances, which you can find by going to Knowledge → FAQs and clicking on the FAQ’s Questions.
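If you are collecting IDs for many FAQs, you can pull them out of the copied URLs with a short script. This is a minimal Python sketch that assumes every Questions URL follows the `/faqs/<id>/questions` pattern of the example URL; the function name `faq_id_from_url` is hypothetical.

```python
from urllib.parse import urlparse


def faq_id_from_url(url: str) -> int:
    """Return the numeric FAQ ID from a URL shaped like .../faqs/<id>/questions (assumed pattern)."""
    # Split the URL path into its non-empty segments, e.g. ["faqs", "222", "questions"].
    segments = [part for part in urlparse(url).path.split("/") if part]
    # Assumption: the ID is the segment immediately after "faqs".
    # list.index raises ValueError if the URL contains no "faqs" segment.
    index = segments.index("faqs")
    return int(segments[index + 1])


print(faq_id_from_url("https://bots.platform.com/faqs/222/questions"))  # 222
```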

Your CSV should look like this:

| Faq Identifier | Faq Name | Utterance |
|---|---|---|
| 339 | property prices | How much is a property |
| 339 | property prices | What's the price of a property |
| 34 | Password reset | How do I reset my password? |
| 210 | company handbook | can I have the handbook |
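If you keep your test utterances in code or another tool, you can generate the CSV instead of typing it by hand. This minimal Python sketch uses the standard `csv` module to write the example rows from the table. It assumes the importer accepts a comma-separated, UTF-8 file whose header row matches the column names shown in the table; the file name `faq_test.csv` and the function name `write_test_csv` are hypothetical.

```python
import csv

# Header names copied from the example table; that the importer requires
# exactly these names is an assumption.
HEADER = ["Faq Identifier", "Faq Name", "Utterance"]

# Each row pairs an expected FAQ (ID and name) with an utterance to test.
ROWS = [
    (339, "property prices", "How much is a property"),
    (339, "property prices", "What's the price of a property"),
    (34, "Password reset", "How do I reset my password?"),
    (210, "company handbook", "can I have the handbook"),
]


def write_test_csv(path: str) -> None:
    # newline="" lets the csv module control line endings (avoids blank rows on Windows).
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(HEADER)
        # csv.writer quotes any field containing a comma or double quote.
        writer.writerows(ROWS)


write_test_csv("faq_test.csv")
```

Letting `csv.writer` handle quoting means utterances that contain commas stay in a single column.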

Step 3: Create and run your test

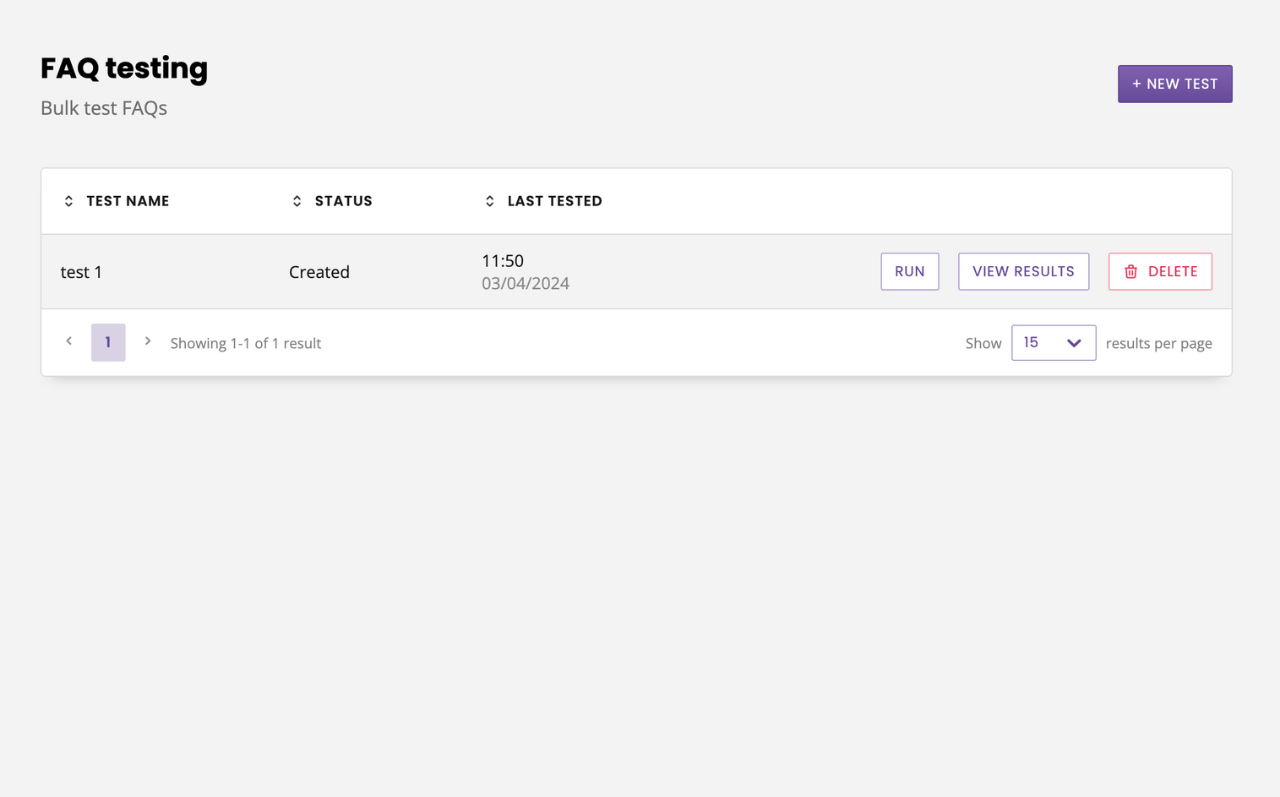

Go to Analyse → FAQ testing and click on New test.

Name your test and hit Next.

Import your CSV.

A test will be created.

Hit Run.

Depending on the number of utterances in your CSV, the test may take a few minutes to run.

Your test’s Status will be one of the following:

Success: all utterances have returned the expected FAQ.

Failure: none of the utterances returned the expected FAQ.

Partial success: some, but not all, utterances failed to return the expected FAQ.

Pending: the test is still running.
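Each status follows from the per-utterance results. The Python sketch below restates those rules as code; it only illustrates the documented definitions and is not the platform’s implementation. The `Status` enum and `overall_status` function are hypothetical, and representing a not-yet-tested utterance as `None` is an assumption.

```python
from enum import Enum
from typing import Optional


class Status(Enum):
    SUCCESS = "Success"
    FAILURE = "Failure"
    PARTIAL_SUCCESS = "Partial success"
    PENDING = "Pending"


def overall_status(results: list[Optional[bool]]) -> Status:
    """One entry per utterance: True = matched, False = not matched, None = not yet tested."""
    # Any untested utterance means the test is still running.
    if any(r is None for r in results):
        return Status.PENDING
    # Every utterance returned its expected FAQ.
    if all(results):
        return Status.SUCCESS
    # No utterance returned its expected FAQ.
    if not any(results):
        return Status.FAILURE
    # A mix of matches and misses.
    return Status.PARTIAL_SUCCESS


print(overall_status([True, True, False]).value)  # Partial success
```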

To dig deeper into the results, click View results.

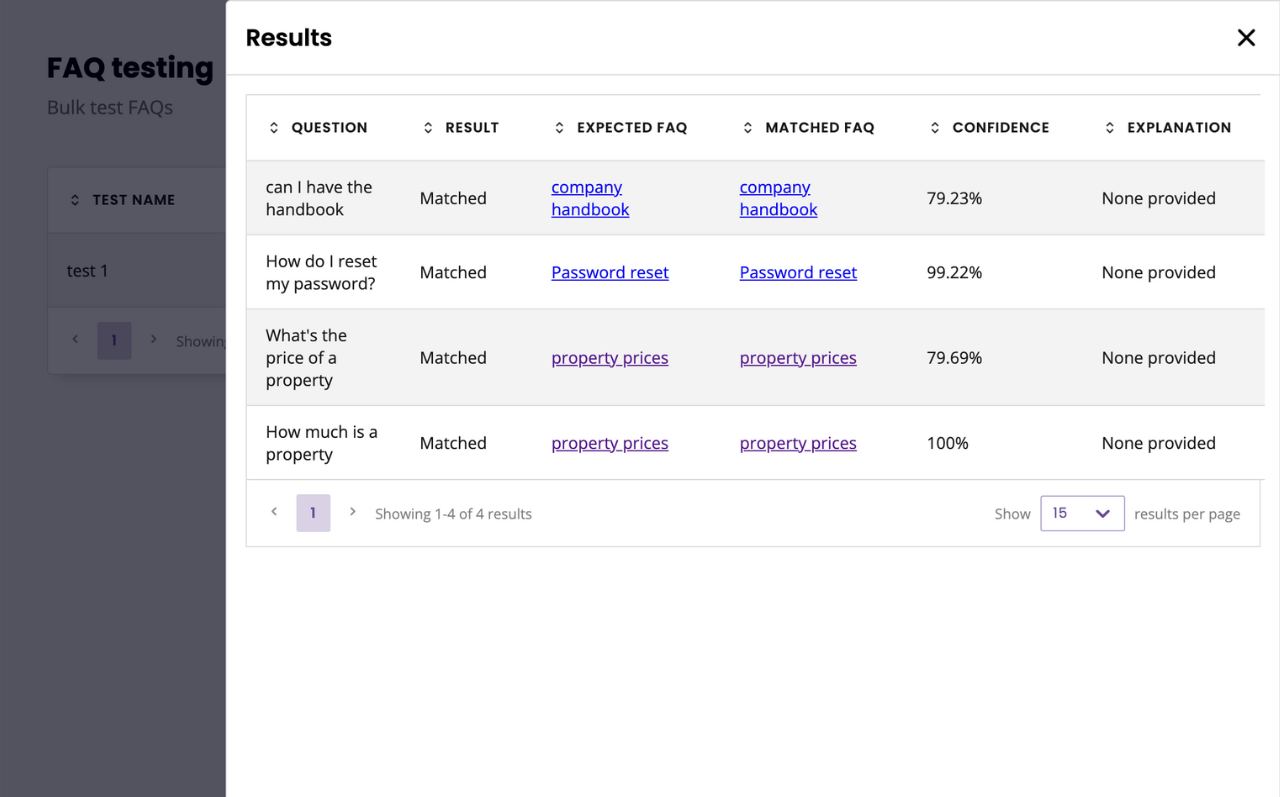

This slide-in panel shows:

Question: The utterance tested.

Result: Whether the utterance matched the expected FAQ.

Expected FAQ: The FAQ you specified in your CSV for this utterance.

Matched FAQ: The FAQ returned from the test.

Confidence: How confident the NLP model is in the FAQ it matched.

Explanation: Where applicable, an indication of why the utterance failed.
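If you copy the results into a script for further review, a small data model makes the columns explicit. This Python sketch is hypothetical: the `ResultRow` class, the `failures_by_confidence` helper, treating "no match" as `None`, and a confidence value between 0 and 1 are all assumptions, not the platform’s data format. Sorting failed rows by confidence is just one possible way to triage them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ResultRow:
    question: str               # the utterance tested
    expected_faq: str           # the FAQ named in your CSV
    matched_faq: Optional[str]  # the FAQ returned by the test; None if nothing matched (assumption)
    confidence: float           # model confidence, assumed to be between 0 and 1

    @property
    def passed(self) -> bool:
        # Mirrors the Result column: did the returned FAQ equal the expected one?
        return self.matched_faq == self.expected_faq


def failures_by_confidence(rows: list[ResultRow]) -> list[ResultRow]:
    """Return only the failed rows, highest confidence first."""
    failed = [row for row in rows if not row.passed]
    return sorted(failed, key=lambda row: row.confidence, reverse=True)
```

Reviewing the most confident mismatches first may help you spot FAQs whose example utterances overlap.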